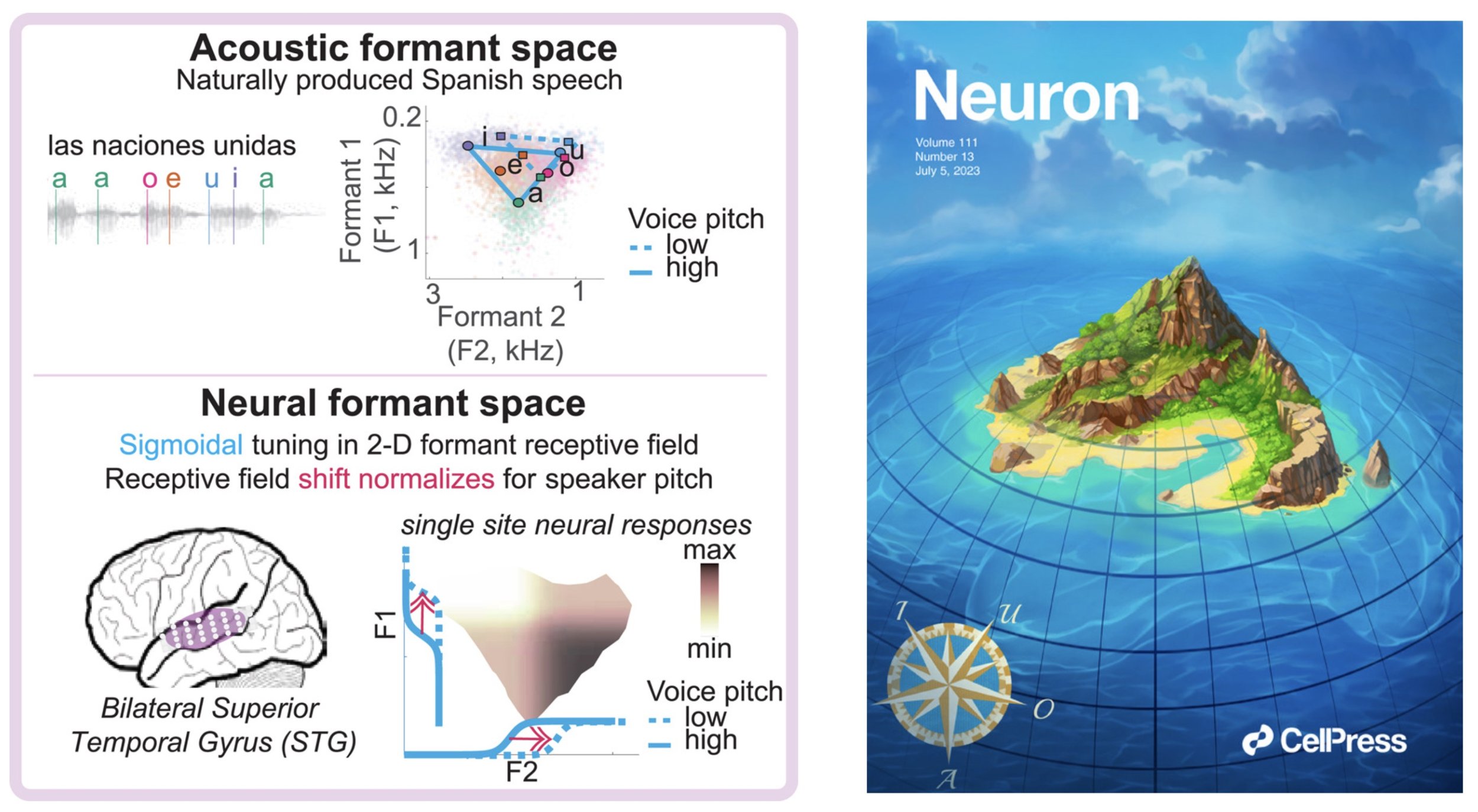

Vowel and formant representation in the human auditory speech cortex

Oganian, Y.*, Bhaya-Grossman, I., Johnson, K., & Chang, E.F. (2023). Vowel and formant representation in the human auditory speech cortex. Neuron, 111(12): 2105-2118. doi:10.1016/j.neuron.2023.04.004.

Vowels, such as /a/ in “had” or /u/ in “hood,” are the essential building blocks of speech across all languages. Vowel sounds are defined by their spectral content, namely their formant structure. In this study, we use direct electrophysiological recordings from the human speech cortex to reveal that local two-dimensional spectral receptive fields underlie a distributed neural representation of vowel categories in the human superior temporal gyrus (STG). We find that neural tuning to vowel formants is non-linear, adjusts for a speaker's particular formant range, and extends beyond the naturally produced vowel space. Ultimately, this study reveals how the complex acoustic tuning to vowel formants in the human STG gives rise to phonological vowel perception.

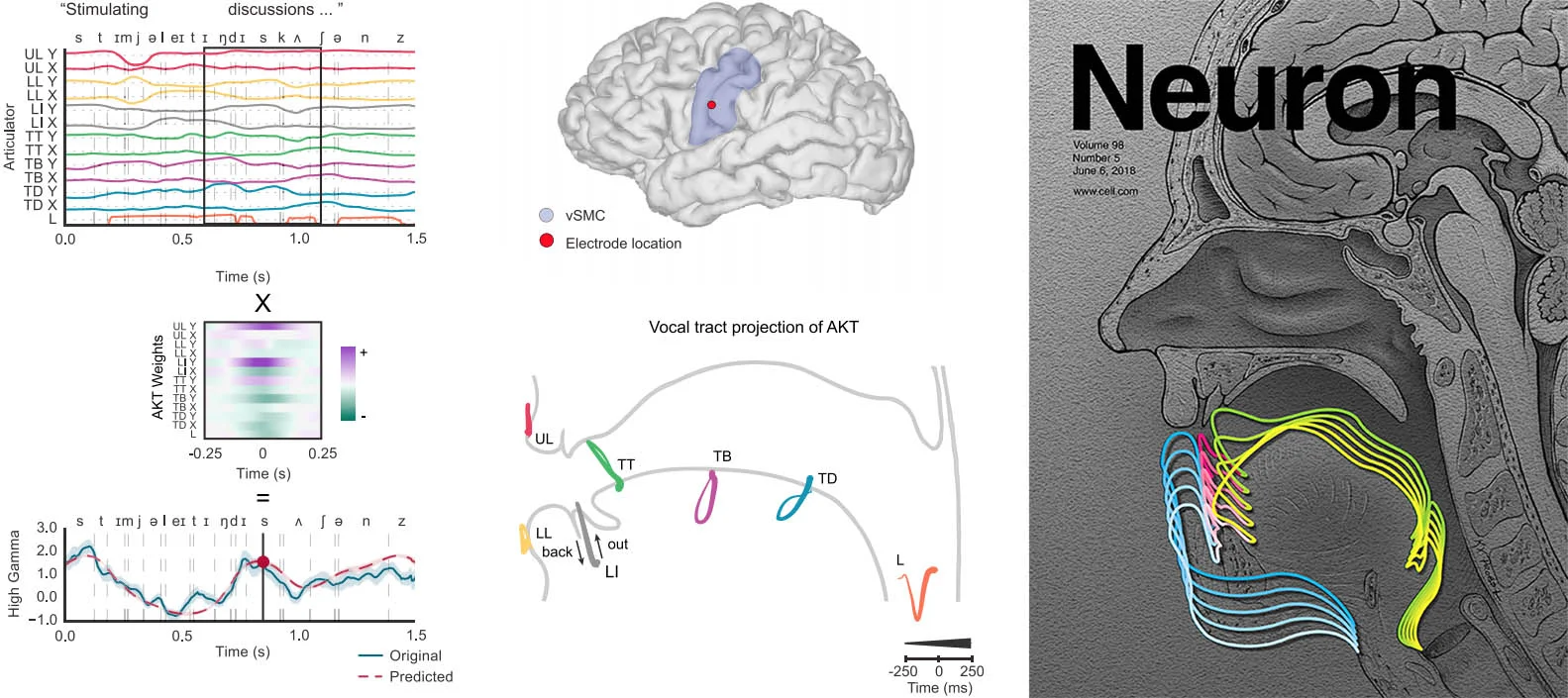

Encoding of Articulatory Kinematic Trajectories in Human Speech Sensorimotor Cortex

Chartier, J., Anumanchipalli, G.K., Johnson, K., & Chang, E.F. (2018). Encoding of Articulatory Kinematic Trajectories in Human Speech Sensorimotor Cortex. Neuron, 98(5): 1042-1054. doi:10.1016/j.neuron.2018.04.031.

When we speak, we engage nearly 100 muscles, continuously moving our lips, jaw, tongue, and throat to shape our breath into the fluent sequences of sounds that form our words and sentences. In our study, we investigated how these complex articulatory movements are coordinated in the brain. Participants with ECoG electrodes placed over a region of ventral sensorimotor cortex, a key center of speech production, read aloud a collection of 460 natural sentences that spanned nearly all the possible articulatory contexts in American English. We found that at single electrodes, neural populations encoded articulatory trajectories in which movements of the lips, jaw, tongue, and throat were coordinated. Across the hundreds of electrodes we studied, we found a diversity of encoded movements that corresponded to every speech sound in the English language.

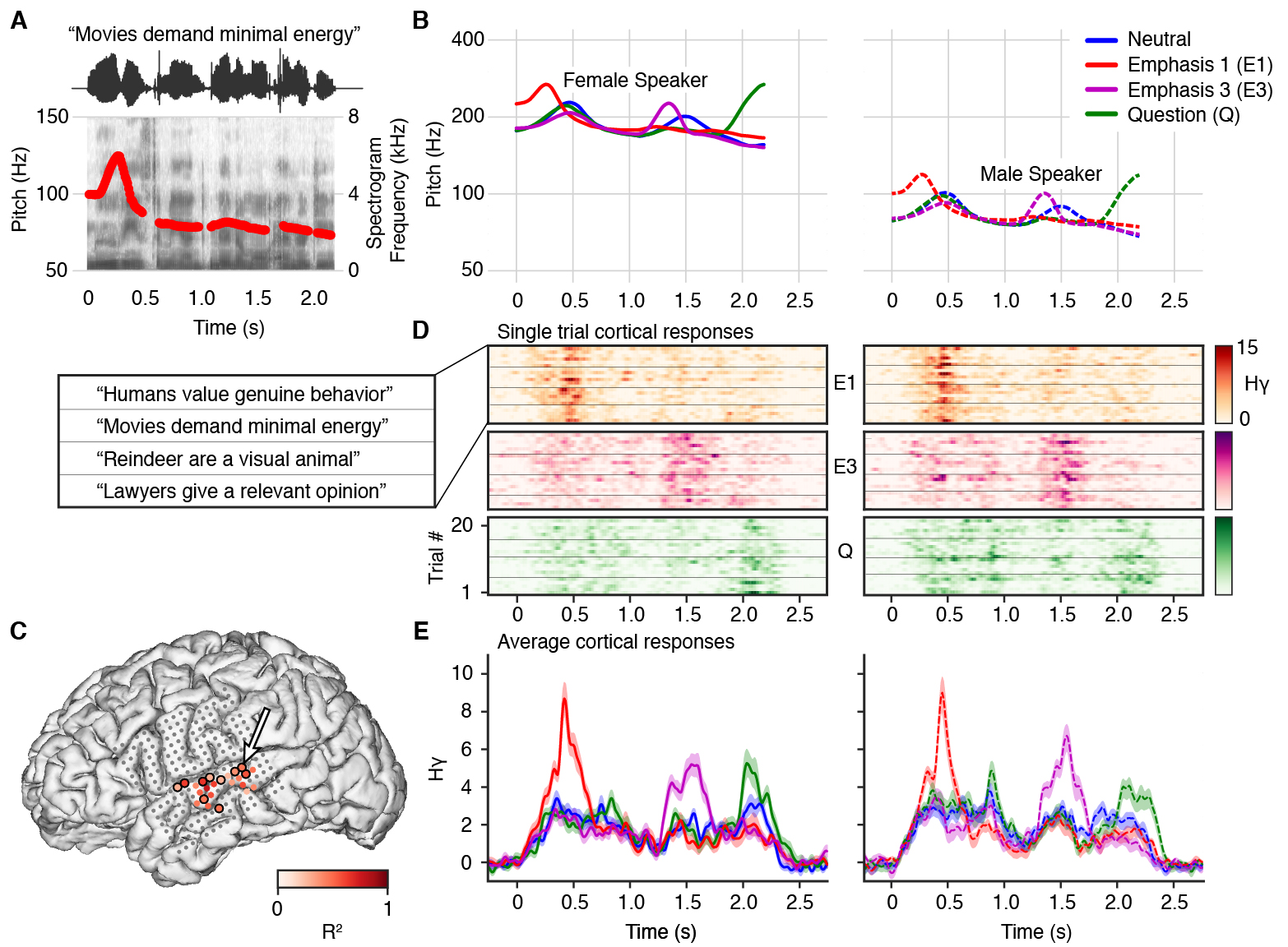

Intonational speech prosody encoding

Tang, C., Hamilton, L.S., & Chang, E.F. (2017). Intonational speech prosody encoding in the human auditory cortex. Science, 357(6353): 797-801. doi:10.1126/science.aam8577.

As people speak, they raise and lower the pitch of their voices to communicate meaning. For example, a person can ask a question simply by raising their pitch at the end of a sentence: “Anna likes to go camping?” Although the use of pitch in languages is very important, it is not known exactly how a listener’s brain represents the pitch information in speech. Here, we recorded neural activity in human auditory cortex as people listened to speech with different intonation contours (changes in pitch over the course of the sentence), different words, and different speakers. We found that some neural populations responded to changes in the intonation contour. These neural populations were not sensitive to the phonetic features that make up consonants and vowels and responded similarly to male and female speakers. We then showed that the neural activity could be explained by relative pitch encoding. That is, the amount of activity did not correspond to absolute pitch values. Instead, the activity represented speaker-normalized values of pitch, such that male and female speakers were considered on the same scale.
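To make the distinction between absolute and relative pitch concrete, here is a minimal sketch of one common way to speaker-normalize pitch: z-scoring log-pitch within each speaker. This is an illustrative assumption, not necessarily the normalization used in the study; the function name `relative_pitch` and the pitch values are hypothetical.

```python
import math
from statistics import mean, stdev


def relative_pitch(f0_hz):
    """Z-score log2 pitch within one speaker's own samples.

    Assumption: this is one simple form of speaker normalization,
    chosen for illustration only.
    """
    log_f0 = [math.log2(f) for f in f0_hz]
    mu, sigma = mean(log_f0), stdev(log_f0)
    return [(x - mu) / sigma for x in log_f0]


# Hypothetical pitch contours (Hz) with a rising, question-like ending.
low_voice = [100.0, 105.0, 110.0, 150.0]
high_voice = [200.0, 210.0, 220.0, 300.0]  # same contour, one octave higher

low_rel = relative_pitch(low_voice)
high_rel = relative_pitch(high_voice)

# The absolute values differ by a factor of two, but the normalized
# contours coincide (up to floating-point error).
for a, b in zip(low_rel, high_rel):
    print(f"{a:+.3f}  {b:+.3f}")
```

Under this scheme, two speakers producing the same contour an octave apart map to the same normalized values, which is the sense in which the speakers are placed "on the same scale."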

Leonard, M.K., Baud, M.O., Sjerps, M.J., & Chang, E.F. (2016). Perceptual restoration of masked speech in human cortex. Nature Communications, 7: 13619. doi:10.1038/ncomms13619.

Social communication often takes place in noisy environments (restaurants, street corners, parties), yet we often don’t even notice when background sounds completely cover up parts of the words we’re hearing. Even though this happens all the time, it is a major mystery how our brains allow us to understand speech in these situations. While we recorded neural signals directly from the brain, participants listened to words where specific segments were completely removed and replaced by noise. For example, when they were presented with the ambiguous word “fa*ter” (where * is a loud masking noise, like a cough), they heard either “faster” or “factor”. Importantly, participants reported hearing the word as if the “s” or “c” was actually there. We found that brain activity in a specific region of the human auditory cortex, the superior temporal gyrus, is responsible for “restoring” the missing sound. When participants heard the same physical sound (e.g., “fa*ter”) as either “factor” or “faster”, brain activity in the superior temporal gyrus acted as if the sound they perceived was actually present. Remarkably, using machine learning analyses, we could also predict which word a listener would report hearing before they actually heard it. Brain signals in another language region, the inferior frontal cortex, contained information about whether a listener would hear “factor” or “faster”. It is as if the inferior frontal cortex decides which word it will hear, and then the superior temporal gyrus creates the appropriate percept.


Mesgarani, N., Cheung, C., Johnson, K., & Chang, E.F. (2014). Phonetic feature encoding in human superior temporal gyrus. Science, 343(6174): 1006-1010.

Speech is composed of elementary linguistic units (e.g. /b/, /d/, /g/, /i/, /a/…), which are called phonemes. In any given language, a limited inventory of phonemes can be combined to create a nearly infinite number of words and meanings. In contrast to most studies that focus on a small set of selected sounds, we determined the neural encoding for the entire English phonetic inventory. By comparing neural responses to speech sounds in natural continuous speech, we could determine that individual sites in the human auditory cortex are selectively tuned, not to individual phonemes, but rather to an even smaller set of distinctive phonetic features that have long been postulated by linguists and speech scientists. We discovered that neural selectivity to phonetic features is directly related to tuning for higher-order spectrotemporal auditory cues in speech, thereby linking acoustic and phonetic representations. We systematically determined the encoding for the major classes of all English consonants and vowels.

Chang, E.F., Rieger, J., Johnson, K.D., Berger, M.S., Barbaro, N.M., & Knight, R.T. (2010). Categorical speech representation in the human superior temporal gyrus. Nature Neuroscience, 13(11): 1428-1432.

A unique feature of human speech perception is our ability to effortlessly extract meaningful linguistic information from highly variable acoustic speech inputs. Perhaps the most classic example is the perceptual extraction of a phoneme from an acoustic stream. Phonemes are the key building blocks of spoken language and it has been hypothesized that a specialized module in the brain carries out this fundamental operation.

In this paper, we used a customized high-density electrode microarray with unparalleled temporal and spatial resolution to perform direct cortical recordings in humans undergoing neurosurgical procedures to address the neural basis of categorical speech perception of phonemes. The research question was simple: where and how are categorical phonemes (‘ba’ vs ‘da’ vs ‘ga’) extracted from linearly parameterized acoustics? Multivariate pattern classification methods revealed a striking patterning of temporal lobe neural responses that paralleled psychophysics. In essence, the superior temporal gyrus physiologically groups veridical sound representations into phoneme categories within 110 msec. These results establish the localization and mechanisms underlying how acoustics become transformed into the essential building blocks of language in the human brain.

Chang, E.F., Niziolek, C.A., Nagarajan, S.S., Knight, R.T., & Houde, J.S. (2013). Human cortical sensorimotor network underlying feedback control of vocal pitch. Proceedings of the National Academy of Sciences, 110(7): 2653-2658.

When we speak, we also hear ourselves. This auditory feedback is not only critical for speech learning and maintenance, but also for the online control of everyday speech. When sensory feedback is altered, we make immediate corrective adjustments to our speech to compensate for those changes. In this study, we demonstrate how the human auditory cortex plays a critical role in guiding the motor cortex to make those corrective movements during vocalization.

We introduced a novel methodology of real-time pitch perturbation in awake, behaving clinical patients with implanted intracranial electrodes. Our recordings allowed an unprecedented level of spatiotemporal resolution to dissect sub-components of the auditory system at the single-trial level. We showed that the auditory cortex is strongly modulated by one’s own speech: perturbation of expected acoustic feedback results in a significant enhancement of neural activity in a posterior temporal region that is specialized for sensorimotor integration of speech. We found that this specific sensory enhancement was directly correlated with subsequent compensatory vocal changes.

Bouchard, K.E., Mesgarani, N., Johnson, K., & Chang, E.F. (2013). Functional organization of human sensorimotor cortex for speech articulation. Nature.

In this study, we recorded directly from the human cortical surface to answer precisely how we articulate. A major question that we address is how population neural activity gives rise to phonetic representations of speech. We first determined that the spatial organization of the speech sensorimotor cortex is laid out according to a somatotopic representation of the face and vocal tract (ABOVE). This is the first time this has been demonstrated during the act of speaking. The identification of several articulator representations by itself, however, does not address the major challenge for speech motor control: how do the speech articulators (lips, tongue, jaw, larynx) become precisely orchestrated to produce even a simple syllable? To address this, we used recent methods for neural “state-space” analyses of the complex spatiotemporal neural patterns across the entire speech sensorimotor cortex. We demonstrate that the cortical state-space manifests two important properties: it has a hierarchical and a cyclical structure (BELOW). These properties may reflect cortical strategies to greatly simplify the complex coordination of articulators in fluent speech. Importantly, these results are consistent with an underlying organization reflecting the construct of a phoneme.